Saturday, December 28, 2013

Power analysis - "sample size by analogy"

Monday, December 23, 2013

Fitting Probability Distributions (in R)

What is Curve Fitting?

The purpose of curve fitting is to find parameter values for a function that best 'fit' a set of data points. One type of curve fitting you probably already know about, though perhaps not using these terms, is regression analysis.

For example, suppose we had one predictor and one criterion variable, and wanted to conduct a simple regression including an intercept term. Our regression equation would look like the following equation.

\[ Y_i= \beta_0 + \beta_1X_i + \epsilon_i \mbox{, where } \epsilon_i \stackrel{iid}{\sim} N(0,\sigma^2) \]

Regression determines \( b_0 \) and \( b_1 \), sample estimates for the population values \( \beta_0 \) and \( \beta_1 \), by minimizing the sum of squared residuals between \( Y_i \) and \( \hat{Y}_i \), where \( \hat{Y}_i=b_0 + b_1X_i \)

Probability Distribution Fitting Example

The purpose of fitting probability distributions is to model the probability or frequency of a process. For this tutorial, we're going to model probability estimates elicited from subjects guessing the age of a man in photograph. In other words, we're going to estimate a continuous subjective probability distribution using only a few observed estimates. It is imprtant to note that while we are directly modeling probabilities in this example, one could fit probability distributions across a variety of contexts.

Scenario

Assume we have asked participants to estimate three probability intervals \( \{p_1,p_2,p_3\} \) of the age of a man in a photograph, which we'll represent with \( A \). These intervals are determined by two cutpoints, that is two candidate ages, for the age of a man: \( \{a_1,a_2\} \):

\[ \begin{align*} p_1&=P(A \leq a_1) \\ p_2&=P(A \leq a_2) \\ p_3&=P(A > a_2) \\ \end{align*} \]

Suppose these candidate ages were \( {a_1=30,a_2=50} \), then in words, the we could elicit \( \{p_1,p_2,p_3\} \) with the following questions:

- What is the probability that the man is less than 30 years old? (\( p_1 \))

- What is the probability that the man is less than 50 years old? (\( p_2 \))

- What is the probability that the man is more than 50 years old? (\( p_3 \))

Assume that our participant gave responses of .3, .8, and .2 for the three questions above. We can express our three observed probability estimates as two values of a cumulative distribution function \( F_A(a) \). For this example we're using the normal distribution to represent the estimates, though there are many different probability distributions one could use depending on the properties of the data you are fitting.

\[ \begin{align*} F_A(a_1)&=P(A\leq a_1)=p_1=.3 \\ F_A(a_2)&=P(A\leq a_2)=p_2=.8 \\ \end{align*} \]

Now suppose we wanted to model how old a participant believed the man in photograph actually was. While we didn't ask this question, if we knew the true underlying distribution of \( A \), we could use its mean, \( E(A) \), as the subject's estimate (or median, that is \( Q_A(.5) \) where \( Q_A \) is the inverse cumulative, or quantile, distribution function for \( A \)).

While we don't know what the true distribution of \( A \) is, we can take a known distribution and find parameter values that provide the best fit between our observed values of \( A \) and the values predicted by a fitted distribution \( \hat{A} \). What constitutes 'best fit' is defined by whatever optimizing rule, or objective function, we choose to apply. A common rule is to minimize squared error between our observed and predicted values. Since in this case what we observed were probabilities, we will choose to minimize the squared error between the observed and predicted probabilities.

\[ \displaystyle\sum\limits_{i=1}^n (F_A(a_i)-F_\hat{A}(a_i,\theta))^2 \]

Where \( \theta \) is the vector of parameters for our fitted distribution. Since we're using a normal distribution in this example, \( \theta \) is the mean and variance.

\[ (F_A(a_1)-F_\hat{A}(a_1,\theta))^2+ (F_A(a_2)-F_\hat{A}(a_2,\theta))^2 \]

We already know \( F_A(a_1) \) and \( F_A(a_2) \) (.3 and .8 respectively) and we picked a normal distribution for \( \hat{A} \), where \( A \sim N(\theta) \) and \( \theta = \{\hat{\mu},\hat{\sigma}^2\} \).

\[ (.3-F_\hat{A}(a_1;{\hat{\mu},\hat{\sigma}^2}))^2+ (.8-F_\hat{A}(a_2;{\hat{\mu},\hat{\sigma}^2}))^2 \]

Now that we have selected a distribution to fit and an objective function, we need find the values for \( \hat{\mu} \) and \( \hat{\sigma}^2 \) that minimize our objective function. As the example R code below will demonstrate, these estimated mean will be approximately 37.7. Precisely how optimization routines work is beyond the scope of this tutorial, but generally, optimization routines like those in R take some user-defined starting values for \( \theta \) and then repeatedly evaluate the objective function with a different \( \theta \) until some decision rule is reached, for example \( n \) iterations with no decrease in value for the objective function. Because it is possible for these routines to get 'stuck' in local minima, one should fit the candidate distribution with a range of values for \( \theta \). For brevity's sake, we'll only use one set of starting values in this example.

Fitting One Subject's Judgements in R

For this example, assume we have asked one subject to estimate the probabilities that a man in a photograph is less than 30, between 30 and 50, and older than 50. The subject's observed responses are {.3,.8,.2}. We need to then convert his responses to cumulative probabilites.

# Assign quantile values to variable 'q'

q <- c(30,50)

# Assign subject's observed probability estimates to variable 'p'

p <- c(.3,.8,.2)

# Retain first 2 cumulative probabilities

# the last last probability is redundant

p.cum <- p[1:2]

Now we need to pick a distribution to fit, starting parameter values for that distribution, and define our objective function. We'll use the normal for this example, and a mean of 40 and variance of 10 for our starting values. Remember, you'll typically want to use many different starting values in order to avoid local minima.

# Define Optimization Function

optimFn <- function(theta) sum(abs(p.cum - pnorm(q, theta[1], theta[2]))^2)

# This is an R version of the same function described in the math section

# pnorm() is the distribution function for the normal distribution theta[1]

# and theta[2] are values for the mean and variance that will be passed to

# the function

# Define starting values

mu <- 40

sigma <- sqrt(10)

# Fit the distribution

A.hat <- optim(c(mu, sigma), optimFn)

# Print the parameter values (mean and standard deviation)

A.hat$par

## [1] 37.68 14.64

So the mean of the distribution we just fit to our subjects responses is 37.6782, with a standard deviation 14.6419. If you wanted to check the fit with a plot, you could do that as well. Since we are fitting a two parameter distribution to two points, the curve should almost always pass exactly through the points.

fitted.mean <- A.hat$par[1]

fitted.sd <- A.hat$par[2]

# CDF

plot(x <- seq(fitted.mean - 3 * fitted.sd, fitted.mean + 3 * fitted.sd, by = 1),

pnorm(x, fitted.mean, fitted.sd), type = "l", col = 2, xlab = "Age of Man in Picture",

ylab = "Probability")

points(q, p.cum)

legend("topleft", col = c("red"), lty = 1, legend = c("Fitted Distribution"))

Sunday, December 15, 2013

Comparing Models using BIC and AIC

Sunday, December 8, 2013

Wednesday, December 4, 2013

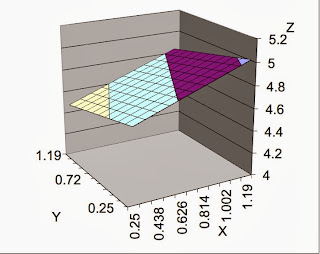

Polynomial Regression for Different Scores

- Can patients provide self-psychological evaluations that agree with psychology professionals’ ratings?

- How well do the attributes of teachers fit the needs or desires of the students?

- Are values of the supervisors of an organization match the values of the subordinates?

- What are the degrees of similarity between perceived stigma of mental ill patients and their caregivers?

- Fit in a linear model by using two construct measures (X & Y) as predictors and Z is the outcome. Calculate R Square and if R Square is not significant, we stop the procedure.

- If the R square is significant, then we added quadratic terms X2 &Y2 and interaction terms XY as set into the model. We test the increment in R Square and if the increment in R Square is not significant, we return back to the linear model.

- If the increment in R Square is significant, we added cubic terms X3, X2Y, XY2 andY3. Again, we test the increment in R Square and if the increment in R Square is not significant, we return back to the quadratic model.

Quadratic:

Reference: